Field

Encryption Keys for SAAS/Cloud computing

Entry for the RSA BSafe Share security competition, June 2009.

Anthony Berglas,

Andrew Baker

Abstract

This

project demonstrates a novel mechanism that enables large numbers of

individual data fields to be encrypted without the need to

store the associated large number of encryption keys. This

has then been used to create a novel architecture that can provide real

role separation in a SAAS/Cloud computing environment. The

implementation also includes a general purpose JSSE

client/servlet

toolkit which sends property lists between authenticated clients and

servers.

The

Problem

Cloud

computing and Software As A Service (SAAS) has seen keen interest by

many enterprises. However, it raises serious

security

issues as corporations may want to store sensitive personal data in

applications which are managed by third parties.

One

way to avoid the need to trust the cloud provider is to encrypt the

information stored in the cloud, and then decrypt it on the

client. A policy engine

can then determine which fields of which records can be decrypted by

which

users.

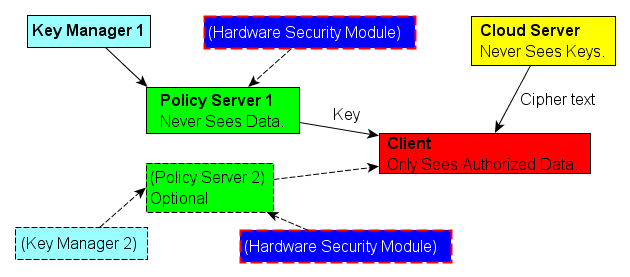

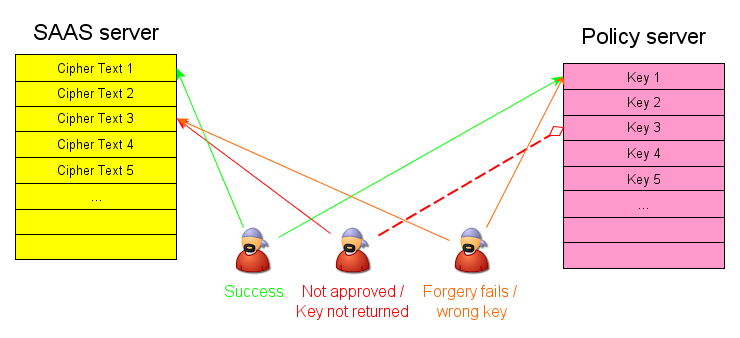

This is illustrated in the diagram above.

The Cloud Server provides the cipher text while a separate

Policy Server

provides the individual Keys. This provides real role

separation

because the Cloud should never

sees the keys, the Policy Server never sees the data, and the

client can only decrypt data that they have access to.

A Client must be authorized by both

the the Cloud and the Policy servers in order to

access both the cipher text and the encryption keys.

Different data elements may have different access rights. For

example, an Employee may be able to see all fields of their own

record, their department manager may see most fields of the

employees in their department, but only the personnel department may

see the health fields. This can be achieved by using

different Field Encryption Keys (FEK) for each protected data element.

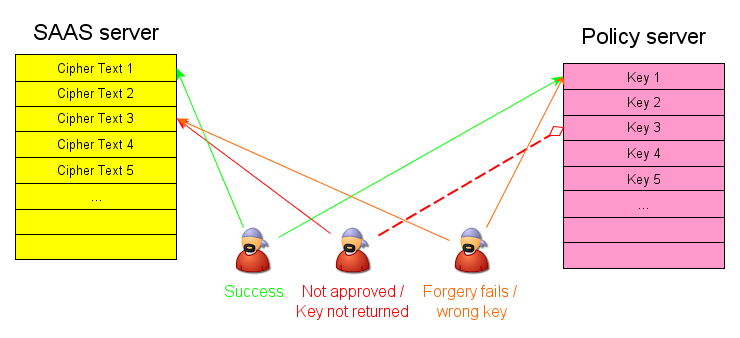

In

the example illustrated above, the user has been authorized to view

data element 1, but not element 3. So if they ask the Policy

Server for element 1's key it the request will be granted.

However, if they ask

for the key to element 3, the Policy Server will will decline the

request. Any attempt by the user to decode

element 3

with the key to element 1 will fail because element 3 was encrypted

with field 3. (This

assumes that the SAAS server has been compromized and is granting the

user access to element 3's cipher text.)

In order to facilitate a

fine

level of control,

each sensitive field of each individual record needs to be encrypted

with a

different Field Encryption Key. So if there are

1,000,000

records and 5 sensitive fields there would be 5,000,000 FEKs.

One

way to achieve that would be to store FEKs for each element of each

record in the key manager or policy engine. However, that

would require the

key manager or policy engine to have a very large and volatile database

which is

not practical.

Another way would be to wrap the FEKs

with a master Key Encrypting Key (KEK) and store the wrapped key with

each record on the

Server. The Client could then pass the wrapped FEKs to the

policy

engine for unwrapping. However, the need to store the wrapped

keys would add considerable overhead to the storage of the records if

small fields are used.

Further,

in either

of the above approaches the policy engine needs to be able to stop the

client from obtaining the FEK of a field to which it does not

have

access to. This means that signed policy data such as the

employee number and department number need to stored with each

individual wrapped FEK. An integrity digest should also be

stored. These would make the overhead much larger

than 16

bytes for each encrypted field in each

record. Thus

in a

realistic system the key and policy data could end up being much larger

than the plain text that is being protected.

The Solution

We

have a different approach which avoids the need to store the FEKs

anywhere. We do this by enabling the policy engine that can

securely (re)generate the FEKs each time that they are required.

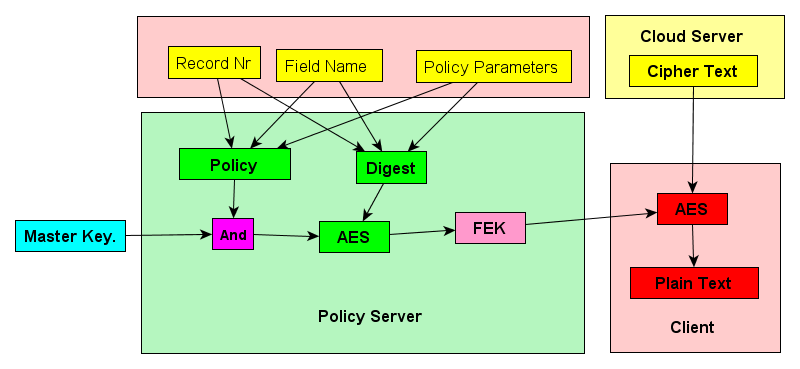

This

is

achieved by encrypting a digest of the record number, field name and

other policy parameters, and using the resultin cipher text as the FEK.

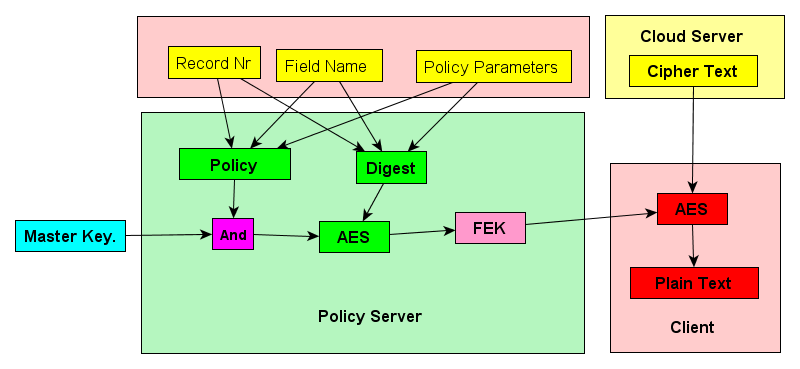

This is illustrated in the diagram above. The client

retrieves an encrypted field of a

particular record from the server. The client also

retrieves

the Field Encrypting Key from the Policy Server, so that it can decrypt

just that field of that record. The policy server examines

Client

user's credentials to authorize the access. It then encrypts

a

digest of the Record Nr and Field Name with the Master Key that is

stored in the Key Manager. This results in a unpredictable

series

of bits which is then used as the Field Encryption Key.

The

Policy Server actually obtains the Record Nr, Field Name and Policy

Parameters (such as Department Id) directly from the client.

A

hostile client could forge these values in order to mislead a Policy

Server to provide it with a FEK to which it is not authorized.

However, the resulting keys would not be useful

because the

Digest includes all the parameters used to make the policy decision.

Thus the Policy Server would return a FEK, but it

would not

be the one required to decrypt the cipher text. (If a policy

parameter such as Department Id were to change then all fields on the

relevant record would need to be re-encrypted.)

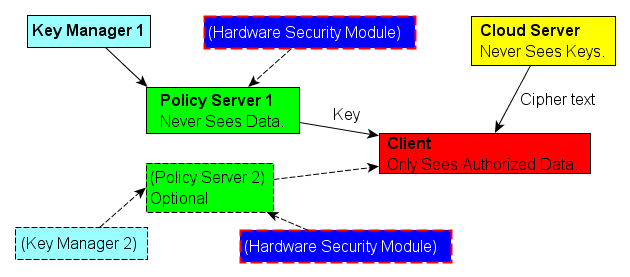

In a full system

there is no need to rely on a single master key. For added

security different master keys could protect

different types

of information. For greater protection the

Master Keys could be stored in a Hardware Security Module that is

attached to the Policy Server. This would mean that the

Policy Server

itself never sees the unwrapped master key. Further, multiple

key managers and policy

servers

could also be provided, with the FEK be the XOR of the keys returned by

each one. Thus an attacker would have to defeat the Cloud

Server

(to obtain access to the cipher text) plus all of

the Policy Servers.

The actual policies as to which users can access which data

should be implemented by both the Cloud Server and Policy

Server.

The Policy Server cannot ask the Cloud Server for policy

information, as that would defeat the role separation.

However,

depending on the application, the task of maintaining the policy could

be simplified by having either the Policy Server or the Cloud Server

implement a simplified, more liberal set of policies. Many

architectural variations on this theme are possible.

Thus

the novel arrangement provided by this submission provides a high

degree of protection

without the

performance overheads of the key storage. The fact that the

Field Encryption Keys

need not be stored enables a large number of them to be used in

practice. This in turn enables fine grained

encryption which

can be

tightly coupled to authorization policy. Perhaps

more

importantly, FEKs that are

never stored can never be lost, so it greatly improves the integrity of

the

system.

Implementation

Architecture

There are four main modules in the system, each stored in a different

Java Package. They are:-

- The Client, which processes the plain text.

- The Cloud Server, which never sees sensitive plain text.

- The Policy Server, which never sees sensitive cipher text.

This module includes a trivial Key Manager.

- The SSL module, which provides a general purpose, fully

authenticated Suite B SSL toolkit.

The

ant task runs the Client, Cloud and Policy Servers which communicate

via SSL. For convenience they actually run in the same

process,

but still communicate via SSL. It is also easy to run them in

separate processes or separate machines by simply running the Main

classes in each of the Client, Cloud and Policy servers. And

for

development, they can be run in a single process with direct

communication without SSL. See

crytpo.rsacomp.Main for details.

Field

Encryption Keys

The

Client authenticates with the Cloud and Policy servers using a client

certificate. The Policy server then uses this certificate's

Subject to

index into a simple authorization database of users. For the

purposes

of this demonstration, the Cloud server performs no authorization in

order to be able to demonstrate the use of encryption to provide

security.

The Policy Server then calculates the FEK using a SHA256 digest of

the Field Name, Record Id and Department Id. The result is

then

folded into 16 bytes and encrypted with the master key and AES128.

This result is then returned this to the client as the FEK.

Including the Department Id in the digest enables it to be

securely used in policies such as Managers can see the Salaries of all

employees within their department.

The

clients then decrypt the fields to which they have authorized access.

The encrypted fields include a 4 byte folding of a SHA256 of

the

plain text to ensure the integrity of the data.

Note

also the way that the Employee record has been defined, using the Field

class. This generalized approach is similar to what is used

in

simpleorm.org, and provides flexibility with simplicity and no

reflection. Methods such as getName

could obveiously be added to make Employee look like a POJO externally.

Java

Secure Socket Extension (JSSE)

The

secure socket module implements Suite B encryption using the BSafe

Share for Java library. It

provides

an SSLServlet abstract class that is similar to HTTPServlet except that

a new instance is created for each request (as it should be for

HTTPServlet!). The server provides concurrent requests using

a

thread pool, by default four threads are enabled.

The module

sends java.util.Properties between the client and server. It

does

not implement HTTP, but rather simply terminates each message with a

null byte. This is safe as unloaded Properties are always

pure

ASCII, with a special \uXXXX notation used for special characters (as

tested by SSLMain). A maximum message size is imposed,

by

default 10,000,000 bytes. No attempt is made to reuse sockets

for

multiple messages, although sessions are reused correctly.

We

would suggest that the JSSE examples in the J/Share package also be

shown in this form. The inverted SLLServlet structure is

almost

certainly more useful to the end user, and does not add much overhead

in practice. (We would also suggest that HTTP be properly

implemented rather than the null byte terminator,

we just did not need HTTP for our example.)

Security

Analysis

A

secure block cipher must provide total Diffusion and Confusion.

The Diffusion property means that the cipher text used for

the

FEK is totally unpredictable even though an attacker knows the exact

plain text. Thus it is easy to show that

the

basic FEK mechanism is secure provided that AES is secure.

(It

would also be possible to skip the AES encryption One

would just add the Master Key to the inputs of the SHA256

digest,

and then use the output of the digest as the FEK. But the two

step process enables the AES phase to be performed in a separate HSM,

and so better protect the master key. Further, the focus of

digest cryptanalysis is on collisions, while the focus on cypers is

upon key recovery, so AES feels slightly more secure.)

We do not

use a (non-trivial) explicit Initialization Vectors (IV) for either the

Master Key or the FEKs. For the master key there is no point,

as

there would only ever be one IV for all the FEKs. (In a more

complete solution we might use a number of Master Keys for different

types of FEKs. But each master key would still only have one

IV.)

We do not use or store an IV for the FEKs

because each

field of each record is encrypted with a

different FEK. The only weakness is that

the same FEK is used at different

points in time. Thus if an attacker knew the specific values

of

the cipher text / plain text pair of a specific field on a specific

record, they could then determine if that field of that record was ever

set back to the same specific value. This is a very obscure

leakage which would not normally justify an IV.

(Recall that knowing the values of one record's field provides

no information

about a different record. Further, the 4 byte

integrity

digest is prepended to the plain text. In combination with

CBC

mode this essentially uses the entire value of field itself as an IV.)

For

greater security an IV could easily be included, we just have made a

business decission not to in our example. A different one

could

be used for each field, or one could be shared for all fields in a

record. Because of the local scope of the FEK, a simple

counter

would suffice. Storing an IV would also effectively shred the

FEKs each time the data is (re)encrypted, which could be useful if a

user's access rights are reduced.

If an IV is not used then we cannot use a stream

cipher such as

Galois Counter Mode for. If we did, then an attacker that

knew one plain

text / cipher text pair for a specific field in a specific

record could

easily decipher any new value for that specific field in that specific

record. CBC mode

is largely immune from that attack, and is completely immune given the

prepended 4 byte integrity digest.

(An insecure alternative would be to use the integrity digest as an

explicit IV,

and then a stream cipher could be used on the rest of the plain text.

However, that would enable an attacker to brute force the

plain text against the exposed digest.)

A further refinement would be to encrypt small fields

without needing to pad blocks.

It

would be good if J/Safe could provide a variable block size algorithm.

A number are published, but are not Suite B.

However, it is

easy to construct a variable block cipher using AES or SHA256 and a

single stage Feistel network.

Conclusion

Cloud

computing and Software As A Service provides new challenges for data

protection. Field Encryption Keys enable real role separation

between the Cloud Server and the Policy Server. However, the

large number of FEKs that would be required would not be practical

without our novel approach to which avoids the need to store them

explicitly.

We have provided a sound proof of concept of the

practical use of FEKs. We have also produced a generic JSSE

server

package, and demonstrated the use of client certificates for

authentication.

In combination these provide a solid platform for practical

applications to be built that utilize this innovative technology.

End.